Nearest neighbor attack: It sounds like something out of a spy thriller, right? But this isn’t some fictional plot; it’s a very real threat to the security of your machine learning models. These attacks exploit the inherent vulnerabilities in how these algorithms work, using cleverly crafted data to either steal information about your model or even extract the model itself. Think of it as a digital heist, where the thief doesn’t need to break into a server, but instead cleverly manipulates the system’s own logic against it. We’re diving deep into understanding how these attacks work, what makes models vulnerable, and most importantly, how to protect yourself.

This exploration will cover various types of nearest neighbor attacks, including membership inference attacks (where attackers figure out if a specific data point was used to train the model) and model extraction attacks (where they try to replicate your model entirely). We’ll look at the specific characteristics of datasets that make them more susceptible, and detail the step-by-step procedures of these attacks. Finally, we’ll examine defense strategies and explore real-world examples to paint a complete picture of this critical security concern in the age of AI.

Introduction to Nearest Neighbor Attacks

Nearest neighbor attacks, a sneaky type of adversarial attack, exploit the vulnerabilities inherent in machine learning models that rely on proximity-based classification. These attacks manipulate input data so that the model misclassifies it and makes incorrect predictions. Understanding these attacks is crucial for building robust and secure machine learning systems.

Nearest neighbor algorithms are a cornerstone of machine learning, known for their simplicity and intuitive approach to classification and regression. They assign a data point the majority class of its nearest neighbors in the feature space (or, for regression, the average of their values). “Nearest” is usually determined by a distance metric, such as Euclidean distance. These algorithms are commonly used in applications ranging from image recognition and recommendation systems to anomaly detection and data clustering. Their straightforward nature makes them easy to understand, but also surprisingly vulnerable.
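To make the mechanics concrete, here is a minimal sketch of a k-nearest neighbor classifier using Euclidean distance and a majority vote. The function name `knn_predict` and the toy data are assumptions made for illustration; a production system would normally rely on an established library.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Return the majority label among the k training points closest to query.

    train is a list of (features, label) pairs with equal-length feature tuples.
    """
    # Sort training points by Euclidean distance to the query.
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    # Count the labels of the k closest points and return the most common one.
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical toy data: two well-separated groups in a 2-D feature space.
train = [
    ((1.0, 1.0), "A"), ((1.2, 0.8), "A"), ((0.9, 1.1), "A"),
    ((5.0, 5.0), "B"), ((5.2, 4.9), "B"), ((4.8, 5.1), "B"),
]

print(knn_predict(train, (1.1, 1.0)))  # query sits inside the "A" group
print(knn_predict(train, (4.9, 5.0)))  # query sits inside the "B" group
```

Everything the model “knows” is the stored training set itself, which is why the attacks discussed in the rest of this article focus on what the model’s answers reveal about those stored points.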

Vulnerable Datasets and Nearest Neighbor Attacks

Certain datasets are inherently more susceptible to nearest neighbor attacks than others. Datasets with high dimensionality, where features are numerous and potentially correlated, often prove more vulnerable. Small changes spread across many features can add up to a large shift in distance, potentially moving a data point closer to a different class. High-dimensional data also suffers from the “curse of dimensionality,” where the nearest and farthest points end up at similar distances, so “nearest” becomes less meaningful. For example, a facial recognition system using a high-dimensional feature vector of pixel intensities could be tricked by subtle changes in the input image designed to alter its nearest neighbor.

Similarly, datasets with overlapping class distributions, where data points from different classes are closely clustered together, are also highly susceptible. A small perturbation in a data point near the class boundary can easily cause misclassification. Consider a spam detection system trained on email text; if the spam and non-spam emails are very similar in terms of their word usage, an attacker could craft a spam email that looks very similar to legitimate emails, thereby bypassing the nearest neighbor classifier. Another example would be a credit scoring system; if the features defining good and bad credit scores are very close, small modifications to an individual’s credit profile could move them across the boundary between a “bad” and a “good” credit score.
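The claim that distances become less meaningful in high dimensions can be checked with a small experiment. The sketch below assumes uniformly random points in a unit hypercube, which is a simplification of real data, and measures how much farther the farthest point is than the nearest one. The function name `relative_contrast` is an assumption for illustration.

```python
import math
import random

def relative_contrast(dim, n_points=200, seed=0):
    """(farthest - nearest) / nearest distance from one random query to random points."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = [
        math.dist(query, [rng.random() for _ in range(dim)])
        for _ in range(n_points)
    ]
    return (max(dists) - min(dists)) / min(dists)

# The contrast shrinks as the number of dimensions grows.
for dim in (2, 20, 200):
    print(dim, round(relative_contrast(dim), 2))
```

When the contrast is small, a modest perturbation is enough to change which stored point counts as the nearest neighbor.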

Types of Nearest Neighbor Attacks

Nearest neighbor attacks exploit the inherent vulnerabilities of machine learning models that rely on proximity to classify data points. These attacks don’t directly target the model’s internal workings but rather manipulate input data to achieve a desired outcome, often misclassification or information leakage. Understanding the different types of these attacks is crucial for developing robust defense mechanisms.

These attacks can be broadly categorized based on their goals and methodologies. Two primary types stand out: membership inference attacks and model extraction attacks. While both leverage the model’s nearest neighbor properties, their objectives and techniques differ significantly.

Membership Inference Attacks

Membership inference attacks aim to determine whether a specific data point was part of the training dataset used to build the machine learning model. The attacker leverages the model’s predictions on carefully crafted inputs, looking for subtle differences in the model’s confidence or prediction behavior that might reveal the presence or absence of a specific data point in the training set. For example, if the model exhibits unusually high confidence when predicting the class of a potential training point, this might indicate that the point was indeed used during training. The effectiveness of this attack varies depending on the model’s complexity and the size of the training dataset. Models that overfit, often those trained on small datasets, are generally more vulnerable because they behave noticeably differently on points they have memorized. Nearest neighbor models are an extreme case, since they store the training points themselves. A successful membership inference attack could compromise the privacy of individuals whose data contributed to the model’s training. Consider a medical diagnosis model: if an attacker can infer whether a patient’s data was used in training, it could potentially reveal sensitive information about that patient’s health status.
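A minimal sketch of why nearest neighbor models leak membership so readily is shown below. It assumes the service exposes the distance to the closest stored point, or a confidence score derived from it. Real deployments vary in what they reveal, so treat this as an illustration of the signal rather than a recipe. All names and values are hypothetical.

```python
import math

def nearest_distance(train_points, query):
    """Distance from query to the closest stored training point."""
    return min(math.dist(p, query) for p in train_points)

# Hypothetical training set memorized by a nearest neighbor model.
train_points = [(0.2, 0.7), (0.5, 0.1), (0.9, 0.4)]

candidate_member = (0.5, 0.1)     # was in the training set
candidate_outsider = (0.5, 0.15)  # similar, but never seen in training

# A distance of exactly zero means the query matches a stored record.
print(nearest_distance(train_points, candidate_member) == 0.0)
print(nearest_distance(train_points, candidate_outsider) == 0.0)
```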

Model Extraction Attacks

Model extraction attacks, on the other hand, go a step further. They aim to reconstruct or approximate the entire target machine learning model. The attacker strategically queries the model with various inputs and observes the corresponding outputs. By analyzing the pattern of these input-output pairs, the attacker can learn the model’s underlying decision boundaries and potentially create a replica of the model, or at least a close approximation. This replica could then be used for malicious purposes, such as bypassing security mechanisms or deploying the model in an unauthorized context. The effectiveness of model extraction attacks depends heavily on the model’s complexity and the number of queries the attacker can make. More complex models, naturally, present a greater challenge to extract, requiring a larger number of queries. Imagine a fraud detection model used by a bank. A successful model extraction attack could allow attackers to create a copy of the model, potentially used to bypass the bank’s fraud detection system.

Comparison of Attack Effectiveness

The effectiveness of both membership inference and model extraction attacks varies depending on factors like the model’s architecture (e.g., linear model vs. deep neural network), the size and characteristics of the training dataset, and the attacker’s resources. Generally, simpler models are easier to extract, while models that overfit their training data are easier targets for membership inference. Deep learning models, while powerful, can also be vulnerable, especially if they haven’t been trained with privacy-preserving techniques. Moreover, the availability of a large number of queries significantly increases the attacker’s success rate in model extraction attacks. Membership inference attacks, while requiring fewer queries, may need a more sophisticated analysis of the model’s output to identify subtle patterns indicative of data membership. The trade-off lies in the information gained: membership inference provides limited information about individual data points, while model extraction aims for a complete replication of the model itself, potentially granting access to much more sensitive information.

Vulnerability Assessment

Nearest neighbor attacks exploit vulnerabilities inherent in machine learning models, particularly those relying on distance metrics for classification or prediction. Understanding these vulnerabilities is crucial for developing robust and secure systems. A comprehensive vulnerability assessment helps pinpoint weaknesses and guides the implementation of effective mitigation strategies.

Factors contributing to a machine learning model’s susceptibility to nearest neighbor attacks are multifaceted and interconnected. These vulnerabilities stem from both the model’s design and the characteristics of the underlying data.

Dataset Characteristics and Attack Susceptibility

The size, dimensionality, and noise level of the training dataset significantly influence a model’s vulnerability. Smaller datasets offer fewer data points to establish robust decision boundaries, making them more susceptible to adversarial examples strategically placed to mislead the nearest neighbor algorithm. High dimensionality gives rise to the “curse of dimensionality”: data becomes sparse, which increases the likelihood of an attacker finding a strategically positioned point that influences classification. Noisy data, containing inaccuracies or irrelevant features, can further weaken the model’s ability to discriminate between legitimate data points and adversarial examples. For instance, a facial recognition system trained on a small, noisy dataset of images might be easily fooled by an attacker who subtly modifies an image to alter its classification.

Methodology for Vulnerability Assessment

Assessing the vulnerability of a specific machine learning model requires a systematic approach. This involves analyzing the model’s architecture, the characteristics of the training data, and the potential impact of adversarial examples. A key aspect is to simulate attacks to observe the model’s response and quantify the impact. This process should consider different attack strategies and varying levels of adversarial perturbation. The results of this assessment should inform the selection of appropriate mitigation techniques.
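One way to quantify the impact of simulated attacks is to measure how often a prediction can be changed at a given perturbation size. The sketch below does this for a 1-nearest-neighbor model, using shifts of one feature at a time as a crude stand-in for a real attack strategy. The function names and the toy data are assumptions for illustration.

```python
import math

def predict_1nn(train, x):
    """Label of the single closest training point."""
    return min(train, key=lambda item: math.dist(item[0], x))[1]

def flip_rate(train, points, eps):
    """Fraction of points whose 1-NN label changes under some single-feature shift of size eps."""
    flipped = 0
    for x in points:
        original = predict_1nn(train, x)
        # Try moving each feature up or down by eps, one at a time.
        candidates = []
        for i in range(len(x)):
            for delta in (-eps, eps):
                shifted = list(x)
                shifted[i] += delta
                candidates.append(tuple(shifted))
        if any(predict_1nn(train, c) != original for c in candidates):
            flipped += 1
    return flipped / len(points)

# Hypothetical data: the decision boundary lies at x = 2 on the first feature.
train = [((0.0, 0.0), "benign"), ((1.0, 0.0), "benign"),
         ((3.0, 0.0), "malicious"), ((4.0, 0.0), "malicious")]
eval_points = [(0.5, 0.0), (1.5, 0.0), (1.9, 0.0), (3.5, 0.0)]

for eps in (0.05, 0.2, 0.6, 2.0):
    print(eps, flip_rate(train, eval_points, eps))
```

Plotting the flip rate against the perturbation size gives a simple robustness curve that can be compared before and after applying a mitigation.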

Vulnerability Factors and Mitigation Strategies

| Vulnerability Factor | Impact Level | Mitigation Strategy | Example |
|---|---|---|---|
| Small Dataset Size | High | Data Augmentation, Synthetic Data Generation | Augmenting a small image dataset with rotated, flipped, or slightly modified versions of existing images. |
| High Dimensionality | Medium | Dimensionality Reduction (e.g., PCA), Feature Selection | Applying Principal Component Analysis (PCA) to reduce the number of features in a high-dimensional dataset before training a nearest neighbor model. |
| Noisy Data | Medium | Data Cleaning, Robust Distance Metrics | Using a distance such as Mahalanobis, which accounts for feature scale and correlation, ideally with a robustly estimated covariance so that outliers do not distort it. |
| Lack of Data Diversity | High | Careful Data Collection, Adversarial Training | Including a diverse range of data points during the training phase to ensure the model is robust against unexpected inputs. Adversarial training involves adding carefully crafted adversarial examples to the training data to improve model robustness. |
| Weak Distance Metric | Medium | Choosing an appropriate distance metric based on data characteristics | Using cosine similarity instead of Euclidean distance for high-dimensional data where the direction of a vector matters more than its magnitude. |
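The last row of the table can be illustrated with a small comparison. The sketch below uses hypothetical word-count vectors, where one document simply repeats the content of another, to show how Euclidean and cosine distance can disagree about which point is nearest. The helper `cosine_distance` is written out by hand for illustration.

```python
import math

def cosine_distance(a, b):
    """1 - cosine similarity; 0 means the vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

# Hypothetical word counts: doc_long repeats doc_short's content ten times.
doc_short = (2.0, 1.0, 0.0)
doc_long = (20.0, 10.0, 0.0)
doc_other = (0.0, 1.0, 2.0)

# Euclidean distance treats the longer copy as far away.
print(math.dist(doc_short, doc_long), math.dist(doc_short, doc_other))
# Cosine distance treats it as nearly identical.
print(cosine_distance(doc_short, doc_long), cosine_distance(doc_short, doc_other))
```

Which behavior is preferable depends on the data: when magnitude carries meaning, Euclidean distance may still be the better choice.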

Attack Methods and Procedures

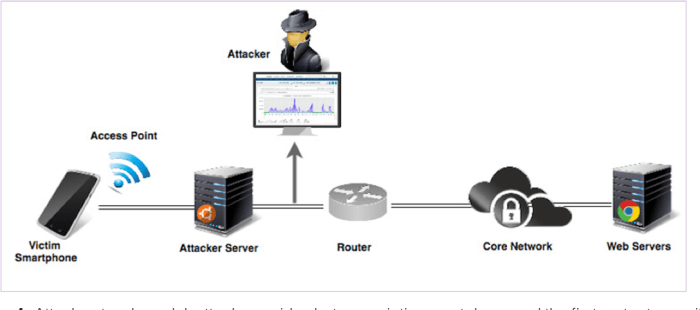

Source: cloudfront.net

Nearest neighbor attacks, while conceptually simple, offer surprisingly effective ways to compromise machine learning models. Understanding the specific procedures involved in these attacks is crucial for developing robust defenses. This section details the step-by-step methods for two common attack vectors: membership inference and model extraction.

Both attacks leverage the fundamental principle of nearest neighbor algorithms: finding the closest data points in a dataset. By cleverly querying the model and analyzing its responses, attackers can infer sensitive information about the training data or even replicate the model’s functionality.

Membership Inference Attack Procedure

A membership inference attack aims to determine whether a specific data point was part of the model’s training dataset. This is achieved by observing the model’s predictions on carefully crafted inputs. Successful inference can reveal sensitive information about individuals included in the training data, posing significant privacy risks.

- Generate Shadow Models: Train several models similar to the target model, using subsets of a publicly available dataset or synthetic data that mimics the characteristics of the target model’s training data.

- Create Test Data: Prepare a set of test data points, including some that were used in the shadow models’ training and some that were not.

- Query the Target Model: Submit the test data points as queries to the target model and record the model’s confidence scores for each prediction.

- Analyze Confidence Scores: On the shadow models, where membership is known, compare the confidence scores of data points that were in the training set with those of points that were not. Training members typically receive noticeably higher confidence.

- Establish Threshold: Define a threshold based on the observed confidence score differences between data points present and absent in the shadow models’ training sets. Data points whose confidence from the target model exceeds this threshold are classified as members of the target model’s training set.
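The steps above can be sketched in a few lines. This example assumes the leaked signal is the distance to the nearest stored point, which acts as an inverse confidence: lower distance means higher confidence. A single shadow model is used for brevity. All function names, the random data, and the midpoint threshold rule are assumptions for illustration, not a description of any particular published attack.

```python
import math
import random

def nearest_distance(train_points, query):
    """Stand-in for the leaked signal: distance to the closest stored point."""
    return min(math.dist(p, query) for p in train_points)

def calibrate_threshold(shadow_members, shadow_non_members):
    """Pick a distance threshold that separates shadow members from non-members."""
    member_scores = [nearest_distance(shadow_members, p) for p in shadow_members]
    outsider_scores = [nearest_distance(shadow_members, p) for p in shadow_non_members]
    # Midpoint between the largest member score and the smallest non-member score.
    return (max(member_scores) + min(outsider_scores)) / 2

def infer_membership(target_query_fn, candidate, threshold):
    """Guess 'member' when the target's leaked distance is at or below the threshold."""
    return target_query_fn(candidate) <= threshold

def sample(rng, n):
    """Draw n random 2-D points from the unit square."""
    return [(rng.random(), rng.random()) for _ in range(n)]

rng = random.Random(42)

# Attacker side: data assumed to resemble the target's training distribution.
shadow_members, shadow_non_members = sample(rng, 50), sample(rng, 50)
threshold = calibrate_threshold(shadow_members, shadow_non_members)

# Victim side: the attacker can call target_query but cannot read target_train.
target_train = sample(rng, 50)

def target_query(q):
    return nearest_distance(target_train, q)

print(infer_membership(target_query, target_train[0], threshold))  # a true member
print(infer_membership(target_query, (5.0, 5.0), threshold))       # far from all training data
```

Against models that do not memorize points exactly, the member and non-member scores overlap, and the threshold trades false positives against false negatives.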

Model Extraction Attack Procedure

Model extraction aims to recreate a functional copy of the target model. This is achieved by repeatedly querying the target model with carefully selected inputs and observing its outputs. The attacker then uses these input-output pairs to train a new model that mimics the behavior of the target model. This attack can lead to the complete replication of valuable intellectual property.

- Query Selection Strategy: Choose a method for selecting query inputs, such as random sampling, or a more strategic approach based on maximizing information gain (e.g., exploring the model’s decision boundary).

- Query the Target Model: Submit the selected inputs to the target model and record the corresponding outputs.

- Data Collection: Gather a substantial number of input-output pairs to provide sufficient data for training the extracted model.

- Train a Substitute Model: Use the collected input-output pairs to train a new model (e.g., a k-nearest neighbor model) that approximates the target model’s behavior.

- Evaluate Accuracy: Assess the accuracy of the extracted model by comparing its predictions to those of the target model on a separate test set. This determines the effectiveness of the extraction process.
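The procedure above can be sketched end to end. Here the target is a hypothetical black-box function with a simple linear decision rule, queries are chosen by random sampling, and the substitute is a 1-nearest-neighbor model built directly from the recorded answers. Every name and the decision rule are assumptions for illustration.

```python
import math
import random
from collections import Counter

def knn_predict(train, query, k=1):
    """Majority label among the k training points closest to query."""
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

def target_model(x):
    """Hypothetical black-box target: the attacker sees only the output label."""
    return "approve" if x[0] + x[1] > 1.0 else "reject"

rng = random.Random(7)

def random_query():
    """Query selection by random sampling from the unit square."""
    return (rng.random(), rng.random())

# Query selection, querying, and data collection: record 500 input-output pairs.
stolen_pairs = [(q, target_model(q)) for q in (random_query() for _ in range(500))]

# Substitute model: a 1-NN classifier over the recorded pairs.
def substitute_model(x):
    return knn_predict(stolen_pairs, x, k=1)

# Evaluation: agreement with the target on fresh inputs.
test_inputs = [random_query() for _ in range(200)]
agreement = sum(substitute_model(x) == target_model(x) for x in test_inputs) / len(test_inputs)
print(f"agreement with target: {agreement:.2%}")
```

Agreement with the target, rather than accuracy on ground-truth labels, is the relevant measure here, since the attacker’s goal is to copy the target’s behavior.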

Defense Mechanisms and Mitigation Strategies

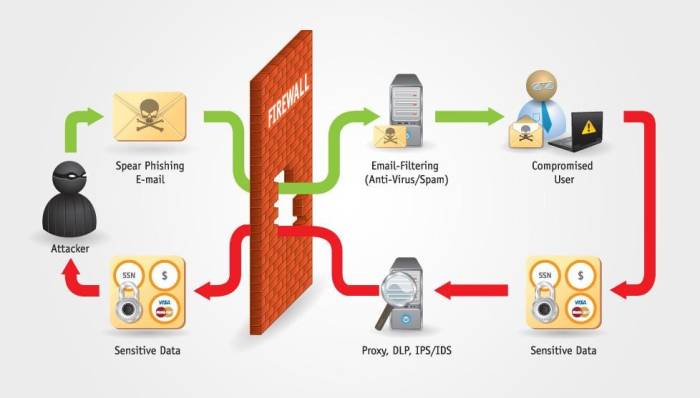

Source: medium.com

Nearest neighbor attacks, while powerful, aren’t invincible. Several defense mechanisms can significantly reduce their effectiveness, bolstering the security and privacy of your machine learning models. These strategies often involve a trade-off between enhanced security and potential impacts on model performance – a balance that needs careful consideration.

Effective defense mechanisms against nearest neighbor attacks hinge on making it harder for adversaries to manipulate the training data or exploit the model’s inherent vulnerabilities. This involves both proactive measures to strengthen the model and reactive measures to detect and mitigate attacks. The best approach often combines multiple techniques for a layered defense.

Data Preprocessing and Augmentation

Data preprocessing plays a crucial role in mitigating nearest neighbor attacks. Techniques like noise addition, data normalization, and feature scaling can make it more difficult for attackers to identify and manipulate influential data points. Data augmentation, creating synthetic data points similar to the existing ones, can also help to reduce the impact of poisoning attacks by diluting the effect of malicious samples. For example, adding Gaussian noise to the features of a training dataset can obscure subtle manipulations by an attacker. Similarly, normalization ensures that features with larger scales don’t disproportionately influence the distance calculations in the nearest neighbor algorithm.
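The effect of normalization on distance calculations is easy to demonstrate. The sketch below uses hypothetical customer records with two features on very different scales and shows that the nearest neighbor changes once both features are rescaled to the same range. The helper names are assumptions for illustration.

```python
import math

def min_max_scale(rows):
    """Rescale every feature column to the [0, 1] range."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return [
        tuple((v - lo) / (hi - lo) if hi > lo else 0.0
              for v, lo, hi in zip(row, lows, highs))
        for row in rows
    ]

def nearest_index(rows, query_index):
    """Index of the row closest to rows[query_index], excluding itself."""
    others = [i for i in range(len(rows)) if i != query_index]
    return min(others, key=lambda i: math.dist(rows[i], rows[query_index]))

# Hypothetical records: (age in years, annual income in dollars).
records = [
    (25, 50_000),   # the query record
    (27, 58_000),   # similar age, somewhat higher income
    (70, 50_500),   # very different age, almost identical income
    (45, 120_000),
]

print(nearest_index(records, 0))                 # raw features: income dominates
print(nearest_index(min_max_scale(records), 0))  # scaled features: age counts too
```

Without scaling, an attacker only needs to match the dominant feature to land next to a chosen record.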

Model Modifications and Enhancements

Modifying the core nearest neighbor algorithm itself can improve robustness. Instead of relying solely on the closest neighbor, using a k-nearest neighbor approach (where k > 1) can reduce the influence of individual malicious data points. Alternatively, weighted k-nearest neighbor methods, which assign weights to neighbors based on their distance, can reduce the impact of distant outliers, although they also give more weight to a poisoned point placed very close to the query. Consider a scenario where a malicious actor injects a single, highly influential data point. A standard nearest neighbor algorithm would be highly susceptible. However, a k-nearest neighbor algorithm, with k=5 for instance, would significantly reduce the impact of that single point by considering four other, legitimate neighbors.
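The single-poisoned-point scenario can be reproduced with a few lines of code. The data below is hypothetical: one mislabeled point is injected right next to the expected query, and the prediction is compared for k=1 and k=5.

```python
import math
from collections import Counter

def knn_predict(train, query, k):
    """Majority label among the k training points closest to query."""
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical legitimate training points clustered around the query.
clean = [((1.0, 1.0), "legit"), ((1.1, 0.9), "legit"), ((0.9, 1.2), "legit"),
         ((1.2, 1.1), "legit"), ((0.8, 0.9), "legit"),
         ((4.0, 4.0), "fraud"), ((4.1, 3.9), "fraud")]

# One injected point, mislabeled and placed right next to the expected query.
poisoned = clean + [((1.02, 1.01), "fraud")]
query = (1.03, 1.0)

print(knn_predict(poisoned, query, k=1))  # decided by the injected point alone
print(knn_predict(poisoned, query, k=5))  # the injected point is outvoted
```

The protection only holds while poisoned points remain a minority among the k neighbors, so an attacker who can inject several nearby points can still win the vote.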

Privacy-Preserving Techniques

Protecting the privacy of the training data is paramount. Differential privacy adds carefully calibrated noise during training or to the model’s outputs, making it difficult to infer whether any individual data point was used. Federated learning, where training happens on decentralized data sources without direct data sharing, keeps raw data local, although it does not provide formal privacy guarantees unless combined with techniques such as differential privacy or secure aggregation. Homomorphic encryption allows computations to be performed on encrypted data, preventing unauthorized access to sensitive information. The use of federated learning, for example, prevents the central aggregation of sensitive training data, making it harder for attackers to access and manipulate it.
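As a small illustration of “carefully calibrated noise”, the sketch below applies the Laplace mechanism to a counting query, which is one of the standard building blocks of differential privacy. It is a generic example, not a complete private nearest neighbor classifier. The function names and the count of 120 are assumptions for illustration.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def noisy_count(true_count, epsilon, rng):
    """Release a count with Laplace noise; a counting query has sensitivity 1."""
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)
true_count = 120  # hypothetical: number of training records matching a condition

# Smaller epsilon means stronger privacy and noisier answers.
for epsilon in (0.1, 1.0, 10.0):
    print(epsilon, round(noisy_count(true_count, epsilon, rng), 2))
```

The privacy parameter `epsilon` makes the accuracy trade-off explicit: the noise scale is inversely proportional to it.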

Defense Strategy Comparison

The following table compares different defense strategies, highlighting their strengths and weaknesses. The effectiveness of each strategy depends heavily on the specific attack scenario and the characteristics of the dataset.

| Defense Strategy | Strengths | Weaknesses | Performance Impact |
|---|---|---|---|
| Data Augmentation | Increases data diversity, reduces impact of poisoned points | Can increase computational cost, may not be effective against sophisticated attacks | Moderate decrease |
| Noise Addition | Obscures malicious data points, improves robustness | Can reduce model accuracy, needs careful parameter tuning | Moderate decrease |
| k-Nearest Neighbors (k > 1) | Reduces influence of outliers, improves resilience | Increases computational cost, choice of ‘k’ is crucial | Slight decrease |
| Differential Privacy | Strong privacy guarantees | Can significantly reduce model accuracy, requires careful parameter tuning | Significant decrease |
| Federated Learning | Keeps raw data local, prevents central data aggregation | Increased communication overhead, can be slower to train, no formal privacy guarantee on its own | Moderate decrease |

Real-World Examples and Case Studies

Nearest neighbor attacks are often treated as theoretical in introductory discussions, but their practical implications are easier to appreciate through concrete scenarios. The following hypothetical examples illustrate the sectors that could be affected and the potential impact of a successful attack, which is a useful starting point for developing effective countermeasures.

Financial Sector Vulnerabilities

The financial sector, with its reliance on sensitive data and complex algorithms, is particularly vulnerable to nearest neighbor attacks. Consider a scenario where a malicious actor gains access to a subset of a bank’s customer data, including transaction history and credit scores. By strategically crafting a synthetic data point closely resembling a high-value customer, the attacker could potentially exploit the nearest neighbor algorithm used in fraud detection or credit scoring systems. This could lead to fraudulent transactions being approved or undeserved credit lines being granted, resulting in significant financial losses for the bank and its customers. The consequences can range from individual financial hardship to large-scale systemic instability.

Healthcare Data Breaches

In the healthcare industry, patient data is highly sensitive and protected by stringent regulations. However, nearest neighbor attacks could compromise this security. Imagine an attacker who holds a compromised medical record and submits it, along with slight variations, to a diagnostic model. If the model’s responses reveal that a near-identical record is in its training data, the attacker learns something about that patient and may be able to infer sensitive details such as medical history, insurance details, or even genetic data. This information could be used for identity theft, medical fraud, or blackmail, causing severe harm to patients and eroding public trust in healthcare systems. Such breaches could also result in significant financial penalties for violating data protection laws.

Security System Compromises

Nearest neighbor attacks can also be leveraged to bypass security systems. For instance, consider facial recognition systems used for access control. An attacker could potentially create a synthetic image very similar to an authorized individual’s face, thereby gaining unauthorized access. This could lead to physical security breaches, data theft, or even sabotage, depending on the specific context. The consequences of such an attack could range from minor inconvenience to significant financial losses and physical harm.

Application to Other Machine Learning Vulnerabilities

The principles underlying nearest neighbor attacks are applicable to other machine learning vulnerabilities. For instance, the concept of crafting adversarial examples, where subtle alterations to input data can drastically change the model’s output, shares similarities with the core methodology of nearest neighbor attacks. Similarly, model inversion attacks, which aim to reconstruct sensitive training data from the model’s output, also leverage the idea of finding similar data points to infer information about the original dataset. Understanding the vulnerabilities of nearest neighbor algorithms helps in identifying and mitigating similar risks in other machine learning models.

Future Research Directions

The field of nearest neighbor attack defense is far from settled. While significant progress has been made, several open questions remain, particularly as machine learning models become increasingly complex and prevalent. Future research should focus on developing more robust and adaptable defense mechanisms, as well as anticipating the evolution of attack techniques. This will require a multi-faceted approach, combining theoretical advancements with practical implementations and rigorous testing.

The sophistication of both attacks and defenses is likely to keep increasing. We can expect more nuanced attacks exploiting subtle vulnerabilities in model architectures and training data, requiring equally nuanced and adaptive defenses. The arms race between attackers and defenders will continue to drive innovation in this critical area of cybersecurity.

Advanced Defense Mechanisms Against Evolving Attacks

Current defense mechanisms often struggle to keep pace with the rapidly evolving landscape of nearest neighbor attacks. Research is needed to develop adaptive defenses that can learn and adjust to new attack strategies in real-time. This might involve incorporating techniques from adversarial machine learning, anomaly detection, and reinforcement learning to create a more resilient security posture. For instance, a system could be designed to automatically identify and mitigate newly discovered attack vectors by analyzing attack patterns and adapting its defense strategies accordingly. This adaptive approach would be far more effective than static defenses that are easily bypassed by new attack methods. A promising area of research lies in developing defenses that are not only effective against known attacks, but also generalize well to unseen attacks, leveraging the inherent uncertainty and variability in real-world data.

Improved Model Robustness and Explainability

Part of the difficulty in defending nearest neighbor models against adversarial examples is limited insight into which features and neighbors drive a given prediction. Research into improving the robustness and interpretability of these models is crucial. Techniques such as feature selection, data augmentation, and regularization can enhance model resilience, but more innovative approaches are needed. The ability to understand *why* a model makes a particular prediction allows for the identification of vulnerabilities and the development of targeted defenses. For example, a more explainable model might reveal that its predictions are unduly influenced by a specific, easily manipulated feature, allowing for a focused defense strategy. Further investigation into explainable AI (XAI) methods specifically tailored for nearest neighbor models is vital for building more secure systems.

Enhanced Data Preprocessing and Feature Engineering

The quality and characteristics of training data significantly influence a model’s susceptibility to nearest neighbor attacks. Research into advanced data preprocessing and feature engineering techniques is critical for enhancing model robustness. This includes exploring methods to identify and mitigate noisy data, outliers, and adversarial examples during the training phase. For example, the development of algorithms that can automatically detect and remove adversarial examples from training datasets would significantly improve model security. Moreover, research into developing robust feature representations that are less susceptible to manipulation would be invaluable.
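As a simple baseline for the kind of automatic detection described above, the sketch below flags training points whose label disagrees with the majority of their own nearest neighbors. This is a basic sanitization heuristic under the assumption that poisoned points are isolated among correctly labeled ones. The function name and data are hypothetical, and a determined attacker who injects clusters of points would evade it.

```python
import math
from collections import Counter

def suspicious_points(train, k=3):
    """Return training items whose label disagrees with the majority of their k nearest neighbors."""
    flagged = []
    for i, (features, label) in enumerate(train):
        # Rank all other training points by distance to this one.
        others = [item for j, item in enumerate(train) if j != i]
        others.sort(key=lambda item: math.dist(item[0], features))
        majority = Counter(lbl for _, lbl in others[:k]).most_common(1)[0][0]
        if majority != label:
            flagged.append((features, label))
    return flagged

train = [((1.0, 1.0), "legit"), ((1.1, 0.9), "legit"), ((0.9, 1.2), "legit"),
         ((1.2, 1.1), "legit"),
         ((4.0, 4.0), "fraud"), ((4.1, 3.9), "fraud"), ((3.9, 4.2), "fraud"),
         ((4.2, 4.1), "fraud"),
         ((1.05, 1.0), "fraud")]  # hypothetical injected point

print(suspicious_points(train))
```

Flagged points are candidates for manual review rather than automatic deletion, since legitimate points near a class boundary can also be flagged.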

In summary, the main open research directions are:

- Developing adaptive defense mechanisms that learn and evolve alongside new attack strategies.

- Improving the explainability and robustness of nearest neighbor models through advanced techniques like XAI and regularization.

- Investigating novel data preprocessing and feature engineering methods to mitigate vulnerabilities in training data.

- Exploring the application of differential privacy and other privacy-preserving techniques to protect model training data.

- Developing formal verification methods to mathematically prove the security of nearest neighbor models under specific attack scenarios.

Outcome Summary

So, we’ve journeyed into the shadowy world of nearest neighbor attacks, uncovering their mechanisms and the potential damage they can inflict. From understanding the vulnerabilities inherent in machine learning models to mastering the techniques used to compromise them, we’ve covered the spectrum. But remember, this isn’t just about fear-mongering; it’s about proactive defense. By understanding these threats, we can build more robust and secure machine learning systems, ensuring that the benefits of AI are realized without compromising sensitive data. The fight against these attacks is ongoing, a constant evolution of defense and offense, but with awareness and the right strategies, we can stay ahead of the curve.