Gabagool Leveraging Cloudflare’s R2 Storage Service

Think you’ve got your data storage game on lock? Think again. This isn’t your grandpappy’s cloud; we’re diving deep into how Cloudflare R2, a seriously speedy and scalable object storage service, can handle your gabagool data needs. Forget clunky, expensive solutions; we’re talking streamlined efficiency and cost-effectiveness.

We’ll unpack everything from designing the perfect data schema for R2 to mastering data retrieval and implementing rock-solid security measures. We’ll cover the nitty-gritty details of uploading, optimizing, and managing your gabagool data, ensuring it’s not just stored, but thriving in the cloud. Get ready to level up your data game.

Gabagool Data Storage Needs


Storing gabagool data efficiently and securely is crucial for any business dealing with this delectable cured meat. The sheer volume of information – from production records to sales data, customer preferences, and even marketing campaigns – necessitates a robust and scalable storage solution. Cloud storage is often the better fit, offering flexibility and cost-effectiveness compared to on-premises solutions.

Gabagool data, like any food product data, has specific characteristics that influence storage requirements. It’s not just about the weight and dimensions of the product itself; it’s about the entire lifecycle, from ingredient sourcing and processing to distribution and ultimately, consumption. This involves a diverse range of data types, including images, text, numbers, and potentially even sensor data from production facilities. This complexity necessitates a storage solution capable of handling varied data formats and sizes.

Ideal Storage Requirements for Gabagool Data

The ideal cloud storage solution for gabagool data must prioritize scalability, accessibility, and security. Scalability ensures the system can easily adapt to growing data volumes without performance degradation. As the gabagool empire expands, storage needs will increase proportionally. Accessibility ensures authorized personnel can readily access necessary data whenever needed, facilitating efficient operations and informed decision-making. Security is paramount, safeguarding sensitive information like recipes, supplier details, and customer data from unauthorized access or breaches. Robust encryption and access control mechanisms are vital.

Comparison of Cloud Storage Options for Gabagool Data

Several cloud storage options exist, each with its own strengths and weaknesses. Amazon S3, Google Cloud Storage, and Azure Blob Storage are prominent contenders. The choice depends on factors like budget, required features, and integration with existing systems. For instance, Amazon S3 offers a wide range of features and is generally considered mature and reliable, but it can be more expensive than other options. Google Cloud Storage often boasts competitive pricing, while Azure Blob Storage offers strong integration with other Microsoft services. The most cost-effective option will depend on the specific needs and usage patterns of the gabagool business. A thorough cost-benefit analysis is crucial before selecting a provider. Consider factors like data transfer costs, storage class pricing, and request fees. For a small gabagool producer, a lower-cost option with tiered storage might be suitable. A larger enterprise might benefit from the advanced features and scalability of a more expensive solution.

Gabagool Data Schema for R2 Storage

Cloudflare’s R2 storage service offers a cost-effective solution for storing large amounts of data. To leverage R2 effectively, a well-structured data schema is crucial. R2 is a flat object store, but slashes in object keys let you emulate a hierarchy: for example, data could be organized by year, then month, then specific production batch, with each batch prefix holding images of the gabagool, production logs, quality control reports, and sales data. File naming conventions should be clear and consistent, facilitating easy retrieval. For instance, `2024-10-26_Batch123_QualityReport.pdf` provides a clear indication of the file’s content and origin. Because R2 can list objects by key prefix, this layout makes it easy to retrieve everything for a given month or batch. Consider using JSON or CSV for structured data, ensuring compatibility with various analytical tools. For images, standard formats like JPEG or PNG are recommended.
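The layout above can be generated by a small helper so that every writer builds keys the same way. A minimal sketch: the `build_object_key` name, the category folder names, and the zero-padded month are assumptions for illustration, not R2 requirements.

```python
from datetime import date


def build_object_key(production_date: date, batch_id: str, category: str,
                     doc_type: str, ext: str) -> str:
    """Build a hierarchical key: year/month/batch/category/file.

    The "/" separators are ordinary characters in the key; prefix-based
    listing is what makes them behave like folders.
    """
    filename = f"{production_date.isoformat()}_{batch_id}_{doc_type}.{ext}"
    return (
        f"{production_date.year:04d}/{production_date.month:02d}/"
        f"{batch_id}/{category}/{filename}"
    )


key = build_object_key(date(2024, 10, 26), "Batch123", "quality", "QualityReport", "pdf")
print(key)  # 2024/10/Batch123/quality/2024-10-26_Batch123_QualityReport.pdf
```

Zero-padding the month keeps keys sorting in calendar order when listed.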

Leveraging Cloudflare R2 for Gabagool


Choosing the right storage solution for your gabagool data is crucial. Scalability, cost-effectiveness, and reliability are key considerations. Cloudflare R2, a highly scalable and cost-effective object storage service, emerges as a strong contender, offering significant advantages over traditional solutions and other cloud storage providers. Its integration with the Cloudflare network provides speed and security benefits that are hard to match.

Cloudflare R2 Advantages for Gabagool Data Storage

Cloudflare R2 boasts several compelling advantages for storing gabagool data. Its low cost per gigabyte makes it a budget-friendly option, particularly beneficial for large datasets, and it charges no egress fees for data read out of a bucket. Access through Cloudflare’s global network helps keep latency low for users worldwide, improving the overall user experience. R2 also encrypts all stored objects at rest and serves them over TLS in transit, protecting your valuable gabagool data from unauthorized access. Compared to services like Amazon S3 or Google Cloud Storage, which bill for data transferred out, R2’s pricing is often more competitive for data that is read frequently or served to many users. For rarely accessed archives, R2 also offers a cheaper Infrequent Access storage class.

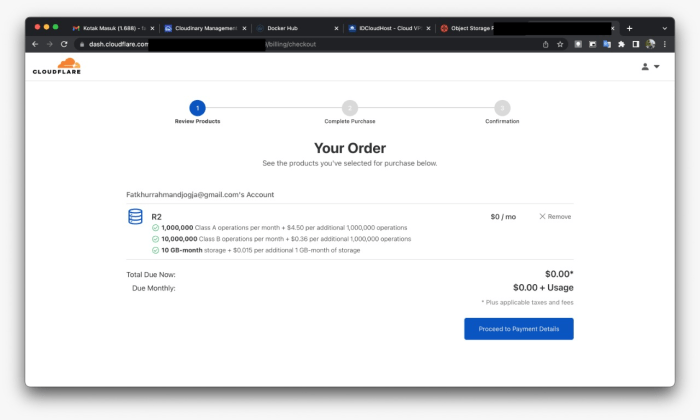

Uploading Gabagool Data to Cloudflare R2

Uploading your gabagool data to Cloudflare R2 is a straightforward process. First, you’ll need a Cloudflare account with R2 enabled and an R2 bucket. The following steps outline the process:

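- Obtain Credentials: In the Cloudflare dashboard, create an R2 API token. This gives you an access key ID and a secret access key (the secret is shown only once, so store it safely). Also note your account ID, which forms part of the R2 endpoint URL. Scope the token to the buckets and permissions it actually needs, and keep these credentials secure: anyone holding them can act on your data with the token’s permissions.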

- Choose a Client Library: R2 exposes an S3-compatible API, so rather than a dedicated R2 library you can use an existing S3 SDK, such as boto3 for Python, the AWS SDK for JavaScript, or the AWS SDK for Go, pointed at your R2 endpoint. Code running in Cloudflare Workers can also use an R2 bucket binding. Select the option that best suits your development environment.

- Write Upload Script: Using the chosen client library, write a script to upload your gabagool data. This script will handle authentication, file selection, and the actual upload process to your R2 bucket. Here’s a simplified Python example (error handling omitted for brevity):

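```python
import boto3

# Replace with your actual values (account ID from the dashboard, keys from your R2 API token).
account_id = "YOUR_ACCOUNT_ID"
access_key_id = "YOUR_ACCESS_KEY_ID"
secret_access_key = "YOUR_SECRET_ACCESS_KEY"
bucket_name = "your-gabagool-bucket"

# R2 speaks the S3 API, so boto3's S3 client works against the account's R2 endpoint.
client = boto3.client(
    "s3",
    endpoint_url=f"https://{account_id}.r2.cloudflarestorage.com",
    aws_access_key_id=access_key_id,
    aws_secret_access_key=secret_access_key,
    region_name="auto",
)

with open("gabagool_data.txt", "rb") as f:
    client.put_object(Bucket=bucket_name, Key="gabagool_data.txt", Body=f)
print("Gabagool data uploaded successfully!")
```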

- Execute and Verify: Run your script. After successful execution, verify the upload by listing the objects in your R2 bucket through the Cloudflare dashboard or using the client library.

Optimizing Gabagool Data Storage in Cloudflare R2

Optimizing your gabagool data storage in Cloudflare R2 involves several best practices. Compressing text-heavy data such as logs, CSV, and JSON before upload can significantly reduce storage costs and improve upload/download speeds; formats that are already compressed, such as JPEG images, gain little. Consider efficient compression algorithms like gzip or brotli. Organize your data within the bucket using a logical key-prefix structure to make retrieval and management easier. Regularly review your data usage and purge outdated or unnecessary files to minimize storage costs. Object lifecycle rules can automate deletion or archival based on object age; for example, you might move less frequently accessed data to R2’s Infrequent Access storage class after a certain period.
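To gauge how much compression helps before uploading, you can compress a sample with Python’s standard library. A sketch using made-up production-log records: repetitive JSON like this compresses very well, while already-compressed images would not shrink much.

```python
import gzip
import json

# Hypothetical production-log records; repetitive JSON compresses well.
records = [
    {"batch": "Batch123", "step": "curing", "temp_c": 13.5, "humidity_pct": 75}
    for _ in range(500)
]
raw = json.dumps(records).encode("utf-8")

# Compress before upload. Record that the object is gzip-compressed
# (e.g. a ".gz" key suffix or object metadata) so readers know to decompress.
compressed = gzip.compress(raw, compresslevel=9)

print(f"raw: {len(raw)} bytes, gzip: {len(compressed)} bytes")
assert gzip.decompress(compressed) == raw  # lossless round trip
```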

Cloudflare R2 Key Features for Gabagool Data Management

| Feature | Description | Gabagool Relevance | Benefit |
|---|---|---|---|
| Low Cost | Competitive per-GB storage pricing and no egress fees. | Reduces overall storage expenses. | Cost savings for large datasets. |
| Global Network | Fast data access from various geographical locations. | Ensures quick data retrieval for users worldwide. | Improved user experience and reduced latency. |
| High Scalability | Easily handles increasing data volumes. | Accommodates future gabagool data growth. | Future-proofs your storage solution. |
| Security | Data encryption at rest and in transit. | Protects sensitive gabagool data. | Enhanced data security and privacy. |

Data Retrieval and Management

Efficiently accessing and managing your gabagool data within Cloudflare R2 is crucial for a smooth operation. This section dives into the practical aspects of retrieving, updating, and troubleshooting your data, focusing on strategies to overcome potential challenges associated with large datasets. Remember, optimizing your data management directly impacts performance and scalability.

Retrieving gabagool data from Cloudflare R2 goes through its S3-compatible API (or, for code running in Cloudflare Workers, an R2 bucket binding). This allows programmatic access, enabling you to integrate data retrieval seamlessly into your existing applications. You can use various programming languages like Python, Node.js, or Go to interact with the API, fetching objects by key or listing all keys that share a prefix. For instance, you might organize your gabagool data by date or product type and use these categories as key prefixes for easier filtering.
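R2’s S3-compatible `ListObjectsV2` operation takes a prefix and an optional delimiter. The sketch below reproduces those listing semantics on an in-memory list of keys, purely to show how prefixes and the `/` delimiter produce folder-like browsing; it is a local simulation with a hypothetical helper name, not an R2 client.

```python
def list_with_prefix(keys, prefix="", delimiter=None):
    """Mimic S3-style prefix/delimiter listing on an in-memory key list.

    Returns (objects, common_prefixes). With a delimiter, keys that have
    more "path" after the prefix are rolled up into a common prefix.
    """
    objects, common = [], set()
    for key in sorted(keys):
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        if delimiter and delimiter in rest:
            # Roll up everything up to and including the next delimiter.
            common.add(prefix + rest.split(delimiter, 1)[0] + delimiter)
        else:
            objects.append(key)
    return objects, sorted(common)


keys = [
    "2024/10/Batch123/quality/report.pdf",
    "2024/10/Batch123/images/slice.jpg",
    "2024/10/Batch124/quality/report.pdf",
    "2024/11/Batch130/sales/summary.csv",
]
print(list_with_prefix(keys, "2024/10/", "/"))
# ([], ['2024/10/Batch123/', '2024/10/Batch124/'])
```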

Efficient Data Retrieval Methods

Efficient data retrieval hinges on proper key organization and the use of appropriate API calls. Employing prefixes in your object keys allows for targeted retrieval, avoiding unnecessary downloads of irrelevant data. Cloudflare R2 also supports HTTP range requests, enabling you to download only specific portions of a large file and significantly reducing bandwidth consumption. The S3 API has no single call that downloads many objects at once, so when you need several objects, issue the requests concurrently rather than one after another.
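The two retrieval techniques above can be sketched with the standard library: building the HTTP `Range` header for a partial download, and fanning out several GETs at once. The `fetch` callable is a hypothetical stand-in for a real client call, and a dictionary plays the part of the bucket so the sketch runs offline.

```python
from concurrent.futures import ThreadPoolExecutor


def byte_range_header(start: int, length: int) -> str:
    """HTTP Range header value for `length` bytes starting at `start`.

    The end offset in a Range header is inclusive, hence the -1.
    """
    if start < 0 or length <= 0:
        raise ValueError("start must be >= 0 and length > 0")
    return f"bytes={start}-{start + length - 1}"


def fetch_many(keys, fetch, max_workers=8):
    """Fetch several objects concurrently with a caller-supplied fetch(key).

    Results come back in the same order as `keys`.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, keys))


# Stand-in for a bucket so the sketch runs without network access.
fake_bucket = {"a.csv": b"batch,weight\n123,4.2\n", "b.csv": b"batch,weight\n124,3.9\n"}
print(byte_range_header(0, 1024))  # bytes=0-1023
print(fetch_many(["a.csv", "b.csv"], fake_bucket.__getitem__))
```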

Gabagool Data Update Strategies

Updating your gabagool data in Cloudflare R2 involves uploading new or modified files; uploading to an existing key replaces that object. Consider strategies to minimize downtime and ensure data consistency. Keeping earlier revisions, for example under timestamped keys, allows you to track changes and revert to previous versions if needed. Using a consistent naming convention for your files and implementing a robust update process minimizes errors and ensures data integrity. A single object upload either completes or does not take effect, but changes that span several objects are not atomic as a group, so with concurrent readers it is safer to write new objects first and update any index or pointer object last.
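One simple way to keep history is never to overwrite: write each revision under a new, timestamped key and treat the newest one as current. This is an application-level convention assumed for illustration (the `@` separator, the timestamp format, and the helper names are arbitrary choices), not a description of any built-in R2 versioning feature.

```python
from datetime import datetime, timezone


def versioned_key(base_key: str, when: datetime) -> str:
    """Return a key for a new revision, e.g. 'recipes/house.json@20241026T120000Z'.

    A fixed-width UTC timestamp sorts lexicographically in time order,
    so the newest revision is simply the largest key.
    """
    stamp = when.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    return f"{base_key}@{stamp}"


def latest_revision(keys, base_key: str):
    """Pick the newest revision of base_key from a list of keys (None if absent)."""
    revisions = [k for k in keys if k.startswith(base_key + "@")]
    return max(revisions) if revisions else None


keys = [
    versioned_key("recipes/house.json", datetime(2024, 10, 1, 9, 0, tzinfo=timezone.utc)),
    versioned_key("recipes/house.json", datetime(2024, 10, 26, 12, 0, tzinfo=timezone.utc)),
]
print(latest_revision(keys, "recipes/house.json"))  # recipes/house.json@20241026T120000Z
```

Rolling back means reading an older revision and writing it again as the newest one.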

Challenges with Large Gabagool Datasets

Managing substantial volumes of gabagool data presents specific challenges. Network latency can become a significant factor, impacting retrieval times. Efficient data partitioning and the use of content delivery networks (CDNs) can mitigate this issue by distributing data closer to your users. Careful consideration of data organization and the use of metadata can improve search and retrieval performance. Regular data cleanup and archiving strategies are essential to maintain performance and reduce storage costs over time.
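The metadata point above can be made concrete with a manifest: a small index object listing keys alongside searchable attributes, so a client downloads one file instead of listing and opening thousands of objects. A minimal sketch, assuming a JSON manifest with hypothetical fields (`product`, `size`) that you maintain yourself.

```python
import json

# Hypothetical catalogue entries: one per object, with searchable attributes.
catalogue = [
    {"key": "2024/10/Batch123/quality/report.pdf", "product": "hot", "size": 48213},
    {"key": "2024/10/Batch124/quality/report.pdf", "product": "sweet", "size": 51002},
    {"key": "2024/11/Batch130/sales/summary.csv", "product": "hot", "size": 9120},
]

# Serialize once; this string would be stored as its own small object.
manifest = json.dumps(catalogue, indent=2)


def find_keys(manifest_json: str, **filters):
    """Return keys whose manifest entry matches every filter (attribute == value)."""
    entries = json.loads(manifest_json)
    return [e["key"] for e in entries if all(e.get(f) == v for f, v in filters.items())]


print(find_keys(manifest, product="hot"))
# ['2024/10/Batch123/quality/report.pdf', '2024/11/Batch130/sales/summary.csv']
```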

Error Scenarios and Solutions

Several error scenarios can arise when working with gabagool data in Cloudflare R2. Understanding these potential issues and their solutions is vital for a stable system. The table below lists common problems and their recommended solutions.

| Error Scenario | Potential Cause | Solution |
|---|---|---|
| 404 Not Found | Incorrect object key, or the object does not exist. | Verify the object key (keys are case-sensitive) and confirm the object was uploaded successfully. |
| 403 Forbidden | Insufficient permissions or incorrect authentication. | Check the API token’s permissions and bucket scope, and confirm the correct credentials and endpoint are used. |
| 500 Internal Server Error | A problem on Cloudflare R2’s side. | Retry the request with exponential backoff. Contact Cloudflare support if the issue persists. |
| Request Timeout | Large object size or network connectivity issues. | Use range requests for large downloads and multipart uploads for large uploads. Improve network connectivity. |
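The 500 and timeout rows in the table call for retrying after a delay, while 404 and 403 should fail fast. A common pattern is exponential backoff with jitter. In this sketch, `RequestError` is a hypothetical exception carrying an HTTP status; a real S3-compatible client raises its own exception types (boto3, for one, also has built-in retry settings).

```python
import random
import time

# Statuses worth retrying: server-side or transient errors.
RETRYABLE_STATUS = {500, 502, 503, 504}


class RequestError(Exception):
    """Hypothetical error type carrying the HTTP status of a failed request."""

    def __init__(self, status: int):
        super().__init__(f"HTTP {status}")
        self.status = status


def with_retries(call, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    """Run call(), retrying transient failures with exponential backoff and full jitter.

    404 and 403 are raised immediately: repeating the request will not fix a
    wrong key or a missing permission.
    """
    for attempt in range(max_attempts):
        try:
            return call()
        except (RequestError, TimeoutError) as err:
            transient = isinstance(err, TimeoutError) or err.status in RETRYABLE_STATUS
            if not transient or attempt == max_attempts - 1:
                raise
            # Full jitter: wait a random time between 0 and base_delay * 2**attempt.
            sleep(random.uniform(0, base_delay * 2 ** attempt))


# Demo: a stand-in that fails twice with HTTP 500, then succeeds.
outcomes = iter([RequestError(500), RequestError(500), "ok"])


def flaky():
    result = next(outcomes)
    if isinstance(result, Exception):
        raise result
    return result


print(with_retries(flaky, sleep=lambda seconds: None))  # ok
```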

Security and Access Control

Protecting your gabagool data—that precious, delicious digital information—requires a robust security strategy when using Cloudflare R2. This isn’t just about keeping prying eyes away; it’s about ensuring data integrity and availability, maintaining compliance, and preventing costly breaches. A well-designed security and access control system is paramount.

Cloudflare R2 offers several built-in security features, but a layered approach is always best. This involves combining Cloudflare’s inherent security with additional measures tailored to your specific needs and risk tolerance. We’ll explore the key components of such a system.

Security Measures for Cloudflare R2

Implementing robust security for your gabagool data in Cloudflare R2 involves multiple layers of protection. Firstly, Cloudflare’s network infrastructure itself provides a significant advantage: its globally distributed network and DDoS mitigation capabilities offer inherent protection against many common threats. Beyond that, use HTTPS for all traffic in transit. R2 already encrypts stored objects at rest; for especially sensitive items such as recipes, you can additionally encrypt data on the client before uploading it, so that only holders of your own keys can read it. Regular security audits and penetration testing are crucial to identify and address vulnerabilities proactively. Use narrowly scoped access keys and rotate them regularly; rotation does not stop a leaked key from being misused, but it limits how long the key stays useful. Finally, maintaining up-to-date software and security patches for any related systems and applications is non-negotiable.

Access Control System Design

A well-structured access control system is essential for managing who can access your gabagool data and what actions they can perform. This system should be role-based, assigning different permissions based on user roles. For instance, “Administrators” might have full access, “Editors” could have read and write permissions, while “Viewers” might only have read-only access. This granular control prevents accidental or malicious data modification and ensures data integrity. Implementing a system of least privilege, granting users only the necessary permissions for their roles, is a best practice. This limits the potential damage from a compromised account. Regularly reviewing and updating user roles and permissions is vital to maintain security and adapt to changing needs.
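The role model above can be expressed as a deny-by-default permission table. This is an application-level sketch with hypothetical role and action names; at the credential level, R2 API tokens can similarly be limited to read-only or read-and-write access on specific buckets.

```python
from enum import Enum


class Action(Enum):
    READ = "read"
    WRITE = "write"
    DELETE = "delete"
    MANAGE = "manage"  # e.g. change bucket settings or credentials


# Least privilege: each role gets only the actions it needs.
ROLE_PERMISSIONS = {
    "administrator": {Action.READ, Action.WRITE, Action.DELETE, Action.MANAGE},
    "editor": {Action.READ, Action.WRITE},
    "viewer": {Action.READ},
}


def is_allowed(role: str, action: Action) -> bool:
    """Deny by default: unknown roles or unlisted actions are refused."""
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("editor", Action.WRITE))   # True
print(is_allowed("viewer", Action.DELETE))  # False
```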

Authentication Method Comparison

Several authentication methods can secure access to your gabagool data in Cloudflare R2. Using access keys (R2 API tokens), a straightforward approach, involves generating separate credentials for each user or application. While simple, managing many keys can become complex. Alternatively, integrating with an existing identity provider (IdP) like Okta or Auth0 provides a more centralized and manageable solution. R2 does not check requests against your IdP directly, so this usually means your application (or Cloudflare Access in front of a custom domain) authenticates users through the IdP and then mediates their requests to R2. This leverages existing user accounts and simplifies user management. Another option is short-lived credentials such as presigned URLs, which grant access to a specific object and automatically expire after a set period, significantly reducing the risk from leaked credentials. The best method depends on your specific needs and infrastructure, balancing security with usability and management overhead. Consider factors like scalability, integration complexity, and security requirements when making your choice.
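Short-lived credentials work because the issuer signs an expiry time that it checks later. In R2 you would normally get this behavior from presigned URLs generated by an S3-compatible SDK; the sketch below is not that format, just a hypothetical HMAC-signed token (the `user:expiry:signature` layout and the `SECRET` value are illustrative assumptions) that shows the expire-and-verify idea with the standard library.

```python
import base64
import hashlib
import hmac
import time

SECRET = b"replace-with-a-random-server-side-secret"  # hypothetical signing key


def _sign(payload: str) -> str:
    digest = hmac.new(SECRET, payload.encode(), hashlib.sha256).digest()
    return base64.urlsafe_b64encode(digest).decode()


def issue_token(user: str, ttl_seconds: int, now=None) -> str:
    """Create a 'user:expiry:signature' token valid for ttl_seconds."""
    expires = int((now if now is not None else time.time()) + ttl_seconds)
    payload = f"{user}:{expires}"
    return f"{payload}:{_sign(payload)}"


def verify_token(token: str, now=None):
    """Return the user if the signature is valid and the token is unexpired, else None."""
    try:
        user, expires, sig = token.rsplit(":", 2)
    except ValueError:
        return None
    # Constant-time comparison avoids leaking signature bytes via timing.
    if not hmac.compare_digest(_sign(f"{user}:{expires}"), sig):
        return None
    current = now if now is not None else time.time()
    return user if current < int(expires) else None


token = issue_token("viewer-42", ttl_seconds=300, now=1_000_000)
print(verify_token(token, now=1_000_100))  # viewer-42
print(verify_token(token, now=1_000_400))  # None (expired)
```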

Secure Data Flow Architecture

Imagine a visual representation: Data originates from your application, securely encrypted using HTTPS. This encrypted data travels through Cloudflare’s globally distributed network, protected by their DDoS mitigation and security features. The data then reaches Cloudflare R2, where it’s stored encrypted at rest. Access to this data is controlled through a central authentication system (like an IdP), verifying user identities before granting access based on predefined roles and permissions. All communication between the application, Cloudflare’s network, and R2 is encrypted, ensuring confidentiality and integrity. Regular security monitoring and logging mechanisms track all access attempts and data modifications, providing an audit trail for accountability and incident response. This layered approach ensures that your gabagool data remains safe and accessible only to authorized users.

Cost Optimization and Scalability

Storing gabagool data efficiently and cost-effectively is crucial for any business. Cloudflare R2 offers a compelling solution, but understanding how to optimize costs and scale effectively is key to maximizing its benefits. This section explores strategies for minimizing storage expenses and ensuring your gabagool data remains accessible and readily available as your needs grow.

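Cloudflare R2’s pricing is based on the amount of data stored and the number of operations performed (Class A operations such as writes and listings, and Class B operations such as reads); unlike many providers, R2 does not charge egress fees for data transferred out. By implementing smart strategies, you can significantly reduce your overall expenditure while ensuring your data remains easily accessible. Understanding your data’s lifecycle and utilizing features like lifecycle policies are essential components of a robust cost-optimization plan.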
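Because the bill has only a storage term and two operation terms, a back-of-the-envelope estimate is simple arithmetic. The sketch leaves unit prices as inputs on purpose: the figures in the usage line are placeholders, not Cloudflare’s prices, so fill them in from Cloudflare’s current R2 pricing page. It models the Standard storage class only and ignores the free tier.

```python
from dataclasses import dataclass


@dataclass
class Prices:
    """Unit prices in USD; copy current values from Cloudflare's R2 pricing page."""
    storage_per_gb_month: float
    class_a_per_million: float  # Class A: mutating/listing operations such as PUT and LIST
    class_b_per_million: float  # Class B: reads such as GET and HEAD


def monthly_cost(stored_gb: float, class_a_ops: int, class_b_ops: int, prices: Prices) -> float:
    """Estimate one month of Standard-class usage, ignoring the free tier.

    There is no bandwidth term because R2 does not charge egress fees.
    """
    return (
        stored_gb * prices.storage_per_gb_month
        + class_a_ops / 1_000_000 * prices.class_a_per_million
        + class_b_ops / 1_000_000 * prices.class_b_per_million
    )


# Placeholder prices for illustration only, not Cloudflare's actual rates.
example = Prices(storage_per_gb_month=0.02, class_a_per_million=5.0, class_b_per_million=0.5)
print(round(monthly_cost(500, 2_000_000, 10_000_000, example), 2))  # 25.0
```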

Storage Cost Optimization Techniques

Several methods exist to control storage costs. These techniques focus on reducing unnecessary storage and leveraging R2’s features to their fullest potential.

- Data Compression: Compressing your gabagool data before uploading it to R2 reduces storage space, directly impacting your monthly bill. Common compression algorithms like gzip can significantly decrease file sizes without impacting data integrity.

- Data Deduplication: Identifying and removing duplicate data before storage saves substantial space. This is particularly effective if your gabagool data contains redundant information or multiple versions of the same files.

- Lifecycle Policies: R2 lets you define object lifecycle rules that act on objects automatically based on their age. For instance, you might move less frequently accessed data to the cheaper Infrequent Access storage class after a certain period, or automatically delete temporary files after a set timeframe.

- Storage Class Selection: R2 offers a Standard storage class and an Infrequent Access class. Infrequent Access has a lower per-GB storage price but adds data retrieval fees and a minimum storage duration, so it suits rarely read data, while frequently accessed data belongs in Standard. Choosing the appropriate class for each dataset can significantly influence your costs.
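Deduplication can be done by hashing file contents before upload: files with the same SHA-256 digest are treated as identical, so only one copy is stored. A minimal sketch, assuming the files fit in memory as a key-to-bytes map (the `dedupe` helper and its alias map are illustrative). You would keep the alias map, for example in a manifest, so duplicate paths still resolve.

```python
import hashlib


def dedupe(files: dict[str, bytes]) -> tuple[dict[str, bytes], dict[str, str]]:
    """Keep one copy per unique content.

    Returns (unique, aliases): `unique` maps the first key seen for each
    content to its bytes; `aliases` maps every duplicate key to that key.
    """
    seen: dict[str, str] = {}  # sha256 hex digest -> first key with that content
    unique: dict[str, bytes] = {}
    aliases: dict[str, str] = {}
    for key, data in files.items():
        digest = hashlib.sha256(data).hexdigest()
        if digest in seen:
            aliases[key] = seen[digest]
        else:
            seen[digest] = key
            unique[key] = data
    return unique, aliases


files = {
    "a/label.png": b"same-bytes",
    "b/label.png": b"same-bytes",
    "c/report.csv": b"batch,weight\n",
}
unique, aliases = dedupe(files)
print(list(unique), aliases)
# ['a/label.png', 'c/report.csv'] {'b/label.png': 'a/label.png'}
```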

Scaling Gabagool Data Storage

As your gabagool data grows, ensuring seamless scalability is paramount. R2’s design allows for effortless scaling, but proactive planning is essential to avoid performance bottlenecks or unexpected cost spikes.

Consider these scaling strategies:

- Horizontal Scaling: R2 inherently scales horizontally. As your data volume increases, R2 automatically handles the increased load without requiring manual intervention or complex configurations. This ensures consistent performance even with massive data growth.

- Data Partitioning: For extremely large datasets, partitioning your gabagool data into smaller, manageable chunks (separate objects per batch or date, or multipart uploads for very large files) can improve retrieval speeds and overall system performance. R2 manages its own servers, so the gain comes from letting clients upload and download pieces in parallel and fetch only the parts they need.

- Monitoring and Adjustment: Continuous monitoring of storage usage and performance metrics is vital for proactive scaling. By tracking key indicators, you can identify potential bottlenecks and adjust your storage strategy accordingly, ensuring your system remains efficient and cost-effective as your data expands.
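For a single very large file, one common form of partitioning is a multipart upload: the file is cut into parts that are uploaded, and retried, independently and in parallel. The planner below only computes the part boundaries. The equal-size parts, 1-based part numbers, and 10,000-part ceiling follow S3 multipart conventions, and the 8 MiB default sits above the 5 MiB minimum part size typical of S3-compatible services; check R2’s documented limits before relying on these values.

```python
MIB = 1024 * 1024


def plan_parts(total_size: int, part_size: int = 8 * MIB, max_parts: int = 10_000):
    """Split total_size bytes into (part_number, offset, length) tuples.

    Every part except the last has the same size. Part numbers start at 1.
    """
    if total_size <= 0:
        raise ValueError("total_size must be positive")
    count = -(-total_size // part_size)  # ceiling division
    if count > max_parts:
        raise ValueError("too many parts; increase part_size")
    return [
        (n + 1, n * part_size, min(part_size, total_size - n * part_size))
        for n in range(count)
    ]


parts = plan_parts(20 * MIB)
print([(n, length // MIB) for n, _, length in parts])  # [(1, 8), (2, 8), (3, 4)]
```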

Monitoring and Analyzing Storage Costs

Regularly monitoring and analyzing your R2 storage costs provides invaluable insights into your spending patterns and allows for proactive cost management.

Here’s how to monitor and analyze your costs:

- Cloudflare Dashboard: The Cloudflare dashboard provides detailed reports on your R2 storage usage and costs. These reports allow you to track your spending over time, identify trends, and pinpoint areas for optimization.

- Automated Alerts: Set up automated alerts to notify you when your storage usage or costs exceed predefined thresholds. This proactive approach enables timely intervention and prevents unexpected cost overruns.

- Cost Allocation: If you’re using R2 for multiple projects or teams, implement a cost allocation strategy to track storage costs per project or team. This enables better budgeting and resource allocation.
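If each team or project writes under its own top-level key prefix, stored bytes can be attributed by summing object sizes per prefix from a bucket listing. A sketch, assuming that prefix-per-team layout and a list of (key, size) pairs you have already collected; it covers storage only, not operation counts. A separate bucket per project is another way to split costs.

```python
from collections import defaultdict


def usage_by_prefix(objects, depth: int = 1) -> dict[str, int]:
    """Sum object sizes (bytes) per key prefix of `depth` path segments."""
    totals: dict[str, int] = defaultdict(int)
    for key, size in objects:
        prefix = "/".join(key.split("/")[:depth])
        totals[prefix] += size
    return dict(totals)


# Hypothetical (key, size) pairs, e.g. collected from a bucket listing.
objects = [
    ("marketing/2024/campaign.mp4", 700_000_000),
    ("marketing/2024/banner.png", 2_000_000),
    ("production/Batch123/log.csv", 50_000),
]
print(usage_by_prefix(objects))  # {'marketing': 702000000, 'production': 50000}
```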

Impact of Data Lifecycle Management

Implementing a robust data lifecycle management strategy is crucial for minimizing storage costs. By strategically managing the lifecycle of your gabagool data, you can reduce storage consumption and optimize your overall expenditure.

For example, a company might move less frequently accessed marketing data to a cheaper storage tier after six months and automatically delete temporary files after 30 days. This keeps only actively used data in the more expensive Standard class, leading to significant cost savings over time, while older data stays available when needed.
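Once lifecycle rules are configured on a bucket, R2 applies them for you; the sketch below only models the decision the example policy describes, which can be handy for previewing which objects a rule would affect. The `tmp/` and `marketing/` prefixes, the action names, and the 182-day stand-in for "six months" are assumptions.

```python
from datetime import datetime, timedelta, timezone


def lifecycle_action(key: str, last_modified: datetime, now: datetime) -> str:
    """Apply the example policy: delete tmp/ after 30 days, move marketing/ after ~6 months."""
    age = now - last_modified
    if key.startswith("tmp/") and age > timedelta(days=30):
        return "delete"
    if key.startswith("marketing/") and age > timedelta(days=182):
        return "transition_to_infrequent_access"
    return "keep"


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
print(lifecycle_action("tmp/upload-123.part", now - timedelta(days=45), now))  # delete
print(lifecycle_action("marketing/2024/banner.png", now - timedelta(days=200), now))  # transition_to_infrequent_access
print(lifecycle_action("production/Batch123/log.csv", now - timedelta(days=400), now))  # keep
```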

Outcome Summary: Gabagool Leveraging Cloudflare’s R2 Storage Service


So, there you have it: a comprehensive guide to harnessing the power of Cloudflare R2 for your gabagool data. From initial setup and optimization to advanced security and cost management, we’ve covered the essentials to ensure your data is safe, secure, and readily accessible. Remember, choosing the right cloud storage isn’t just about ticking boxes; it’s about future-proofing your data strategy. With Cloudflare R2, you’re not just storing data; you’re building a scalable, robust, and cost-effective foundation for the future.